|

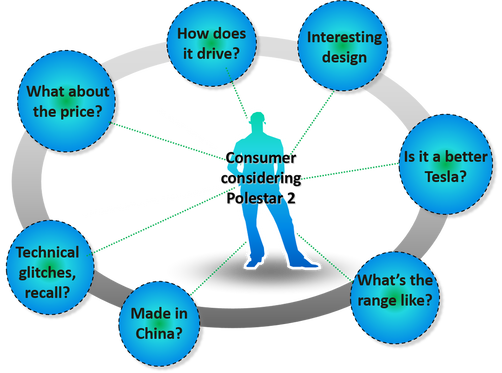

There has been some talk lately about the Polestar 2 and whether it is a credible threat to the Tesla Model 3. I therefore applied NLP to run sentiment analysis on social media feeds, to see what real people were saying about it rather than relying on automotive magazine reviews or the opinions of a few individuals.

But first, what is NLP and what is sentiment analysis? NLP, or Natural Language Processing, is a subfield of linguistics, computer science and Artificial Intelligence (AI) concerned with the interactions between computers and human language, in particular how to program computers to process and analyse large amounts of natural language data. Sentiment analysis, in simple terms, is the use of NLP, text analysis, computational linguistics and biometrics to systematically identify positive or negative sentiment in free text data.

Background to the analysis

The Polestar 2 is a new electric car developed by Polestar, a standalone electric offshoot jointly owned by Volvo and its Chinese parent company, Geely. As the flagship product, it is an attempt to establish Polestar as a big player in the Electric Vehicle (EV) space and is seen as a direct competitor to the Tesla Model 3.

Key questions vehicle leasing companies might ask

With the drive to electrification gathering pace, I assumed vehicle leasing companies might be keen to know what potential customers are saying about the Polestar 2 and what the implications might be for their business.

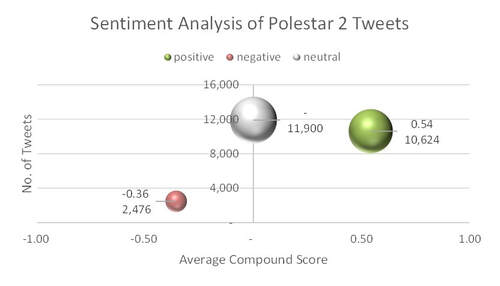

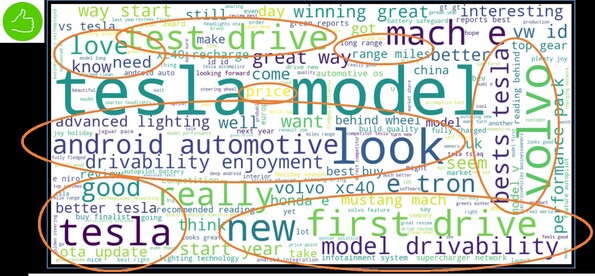

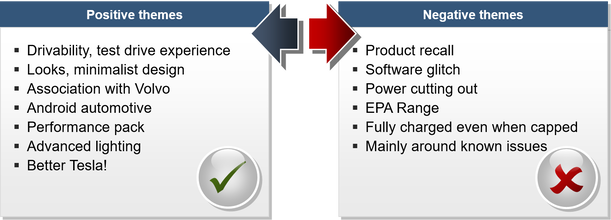

To answer these questions, I performed sentiment analysis on a total of 25,000 English tweets related to “Polestar 2”. Each tweet was classified as positive, negative or neutral. I then used word visualisation to bring this to life.

Technical stuff

I used a range of Python packages to perform this analysis, including Twint to extract tweets, WordCloud for word visualisation and NLTK to clean text (removing stopwords, tokenizing, lemmatizing). To classify tweets as positive, negative or neutral, I used VADER (Valence Aware Dictionary and sEntiment Reasoner), which performs lexicon and rule-based sentiment analysis and is specifically tuned to social media conversations. Based on the actual words used in each tweet, a Compound Score is calculated and assigned to it, ranging from -1 (most negative) to +1 (most positive).

Why sentiment analysis is important, particularly on social media

Social media conversations provide a wealth of data to help brands understand who is out there, what they’re talking about and what's important to them. And best of all, these are real-time conversations!

Of the 25,000 tweets, 48% were neutral. With these neutral tweets excluded, the remaining tweets are overwhelmingly positive (81%). In addition, positive tweets are more positive than negative tweets are negative. This is visualised in the bubble plot below: the green bubble representing positive tweets is clearly larger and further to the right, whereas the red bubble representing negative tweets is smaller and not as far to the left.

Word visualisation

The positive and negative word visualisations below reveal the themes being discussed in the positive and negative tweets respectively.

NOTE: These word clouds are based on actual tweets. The larger the word, the more frequently it appears in tweets.

Themes

The positive and negative themes distilled from the analysis are summarised below.
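As a side note on the classification step described under "Technical stuff", the sketch below shows one way a VADER Compound Score could be turned into a positive, negative or neutral label and then summarised. It is illustrative only: the article does not state which cut-offs were used, so the ±0.05 thresholds (the convention suggested in VADER's own documentation) are an assumption, the function names are hypothetical, and the example scores are made up rather than taken from the analysis.

```python
from collections import Counter

# Assumed cut-offs: the article does not state them. +/-0.05 is the
# convention suggested in VADER's own documentation.
POS_THRESHOLD = 0.05
NEG_THRESHOLD = -0.05


def compound_score(text):
    """Return VADER's compound score for one tweet.

    Needs NLTK plus the 'vader_lexicon' resource (nltk.download('vader_lexicon')).
    The import is lazy so the rest of this sketch runs without NLTK installed.
    In practice you would create the analyzer once and reuse it.
    """
    from nltk.sentiment.vader import SentimentIntensityAnalyzer
    return SentimentIntensityAnalyzer().polarity_scores(text)["compound"]


def label_from_compound(score):
    """Map a compound score in [-1, +1] to a sentiment label."""
    if score >= POS_THRESHOLD:
        return "positive"
    if score <= NEG_THRESHOLD:
        return "negative"
    return "neutral"


def summarise(scores):
    """Share of neutral tweets, and share of positives once neutrals are excluded."""
    counts = Counter(label_from_compound(s) for s in scores)
    total = sum(counts.values())
    decided = counts["positive"] + counts["negative"]
    return {
        "neutral_share": counts["neutral"] / total if total else 0.0,
        "positive_share_excl_neutral": counts["positive"] / decided if decided else 0.0,
    }


# Made-up scores purely for illustration, not data from the analysis.
example_scores = [0.62, 0.0, -0.31, 0.44, 0.01, 0.8]
summary = summarise(example_scores)
```

With real tweets, `compound_score` would be applied to each cleaned tweet first and the resulting scores passed to `summarise`.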
Part of the original question raised was whether Tesla should be concerned about the Polestar 2 being a credible threat to the Tesla Model 3. In short, the answer is yes. The positive themes from consumer conversations far outweigh the negatives and the comment ‘better Tesla’ is an interesting one. The negative themes are mainly around known technical issues and these will surely be resolved by the manufacturer sooner rather than later.

Value of the approach

From this, the value of sentiment analysis on social media conversations is clear. It can be used by just about any business to see what customers or prospects are saying about its brand or products, and about those of its closest competitors too. Indeed, this approach could be used on a variety of conversational or text data across multiple social channels, as well as review sites such as TrustPilot.

In essence, if you want direct access to consumer conversations about your brand or product, and to be able to monitor and react to them in real time, then this is an area worth exploring. It gives you unfettered access to what is effectively your very own large-scale focus group, available on demand.

To harness the power of NLP and sentiment analysis for your brand, please email [email protected] to request your free Discovery consultation, where we'll discuss your requirements and formulate a solution that is right for you.

Brendan Jayagopal, Founder & Managing Director, Blue Label Consulting


Model Inventory

Effective model governance takes careful planning. At first, a business may be apprehensive about the resources and time required to create a central model repository. However, best practice and regulatory guidance show that developing a model inventory, including the risk assessment, the personnel responsible for each model and supporting documentation, can result in better model quality and risk management. With this in mind, a business must carefully consider these questions:

A well-defined Model Inventory will act as a framework for categorising and tracking models, model use, and model changes or updates. This model repository may include information such as:

The Model Inventory process has 3 key stages:

Figure 01 - Key Model Inventory Stages

Identification

First and foremost, define and identify all models. There are regulatory guidelines to help identify models, but a business must settle on its own accepted definition. This will help create uniform standards across the business and provide clear guidelines for all stakeholders. Some processes will clearly qualify as models, while others are better described as complex calculation exercises. The test is threefold: there is a repeatable calculation process, its output is a forecast or estimate, and that output drives a business decision. Once a process meets these 3 criteria, it can be defined as a model.

Model Risk Assessment

Each model should be risk rated based on the complexity and financial impact (materiality) of the calculation process. This risk rating will determine the rigour and prioritisation of all governance and risk management activities, including performance monitoring and periodic validation. Elements that should be considered when determining the risk classification may include the model’s:

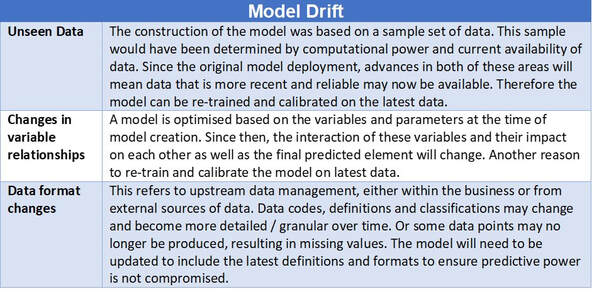

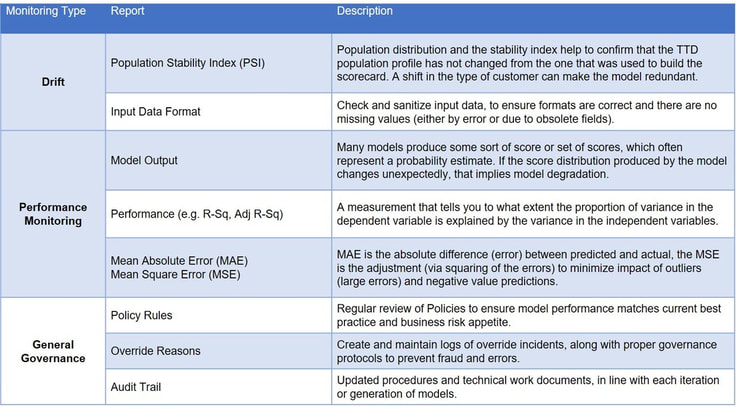
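The identification criteria and risk rating described above can be sketched in code. This is a minimal illustration, not a prescribed implementation: the class and function names are hypothetical, the inventory fields are limited to those the article mentions (owner, risk rating, supporting documentation), and the 1 to 3 scoring scale with its cut-offs is purely an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class CandidateProcess:
    """A calculation process being assessed against the 3 identification criteria."""
    name: str
    repeatable_calculation: bool
    produces_forecast_or_estimate: bool
    drives_business_decision: bool


def is_model(process):
    """A process counts as a model only if it meets all 3 criteria."""
    return (
        process.repeatable_calculation
        and process.produces_forecast_or_estimate
        and process.drives_business_decision
    )


def risk_rating(complexity, materiality):
    """Combine complexity and materiality scores (each 1-3) into a rating.

    The scale and cut-offs are hypothetical; a real framework would define its own.
    """
    score = complexity * materiality
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"


@dataclass
class InventoryEntry:
    """One row of a model inventory."""
    name: str
    owner: str
    risk_rating: str
    documentation: list = field(default_factory=list)


# Hypothetical example processes.
pricing = CandidateProcess("pricing forecast", True, True, True)
report = CandidateProcess("monthly report totals", True, False, False)

inventory = [
    InventoryEntry(p.name, owner="model owner TBC", risk_rating=risk_rating(2, 3))
    for p in (pricing, report)
    if is_model(p)
]
```

Only the process that meets all three criteria ends up in the inventory; the reporting calculation is treated as a calculation exercise rather than a model.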

It is important to have a consistent model risk assessment process that is revisited periodically, both to ensure consistency and to monitor for changes in risk.

Governance

A business should develop and maintain detailed governance guidelines and technical governance work instructions to help establish, implement and maintain a robust model governance framework. This documentation should be stored in or linked to the model inventory, to make it easier to identify the model owners who are responsible for all governance-related activities. The model parameters, controls and criteria for the use of the output of each model should also be identified, governed and evidenced.

In Summary

Models are now critical, if not central, to business. Model Governance is therefore key to maintaining control in an ever-evolving regulatory landscape. However, to get maximum value from this type of investment, companies may need to rethink and fine-tune their strategies, including changing culture and processes, to deliver the control and governance needed by both regulation and good business management.

As models have to adapt, so will model validation and governance. Therefore, make sure that you review your models and catalogue your model inventory. Then proceed to prioritise your models by importance and materiality.

The good news is that you don’t necessarily have to build all this from scratch. There are tools and technologies that you could leverage, such as Azure ML or AWS SageMaker, which can be used to automate the end-to-end model lifecycle, including monitoring and capture of governance data, i.e. the model audit trail.

If you wish to discuss any of the points raised or are interested in finding out how we can help you review or implement your own MRM and Model Governance framework, please contact us here to book your free Discovery consultation.

Brendan Jayagopal, Founder and Managing Director.
Blue Label Consulting

Model Monitoring – Ongoing Performance and Validation

Maintaining models after deployment is one of the key stages of the overall model lifecycle. Once models are deployed, it may be tempting to view the modelling process as complete. In many ways, this is just the beginning.

Model monitoring is the operational stage that follows deployment. Once a model is deployed within the business, the next priority is to ensure it is used as effectively as possible. It is therefore essential to monitor the model properly for as long as it is in use, to gain maximum benefit, to stay in control and to maintain an audit trail.

The purpose of model monitoring is to ensure that the model maintains a predetermined required level of performance. It also covers aspects such as prediction errors, process errors and latency.

The main reason model monitoring is so important is Model Drift: the overall predictive power of a model gradually degrades over time. This can be caused by several factors, such as:

Figure 01 – Model Drift

There is also the issue of Concept Drift, whereby the expectations of what constitutes a correct prediction change over time even though the distribution of the input data has not changed. In other words, the relationship between the model predictor(s) and the outcome being predicted has changed. Concept Drift could occur because of changes in:

As can be seen, a number of factors could affect what is defined as a successful prediction from the model. Given this, and given changing regulatory considerations, ongoing model monitoring is important not only for maintaining the validity of the models but also for future business success. Model degradation has a high potential for significant negative impact, so an effective model monitoring process will aim to identify and mitigate potential sources of model drift as soon as possible.

Figure 02 – Model Monitoring Framework

Periodic Review

A successful model governance framework will always include a full Periodic Review (PR) and validation of models, regardless of whether the model has undergone any change during its operational history. The PR differs from the usual ongoing performance monitoring in that it is a more holistic review of the whole model concept, including:

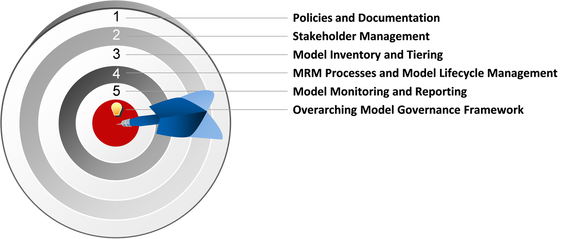

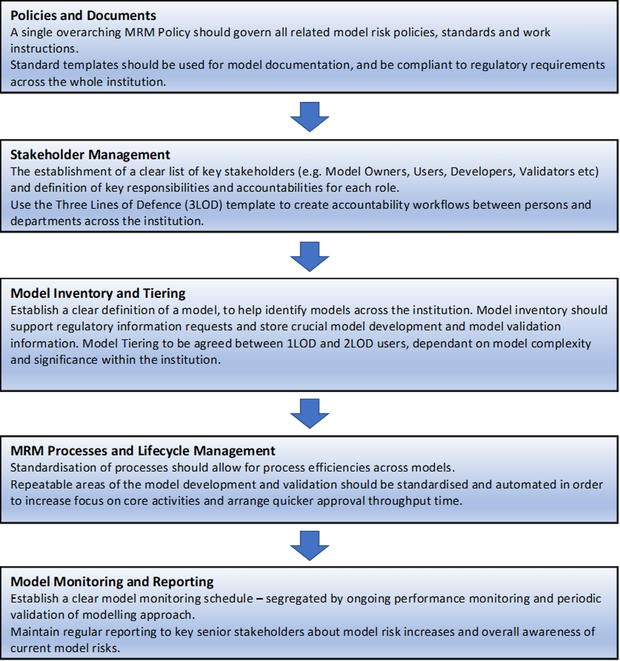
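Returning briefly to the drift discussed earlier in this article, the sketch below shows one way ongoing monitoring could flag a shift in a model input. It uses the Population Stability Index (PSI), which the article does not name; it is swapped in here as one common drift measure. The function name, the bin count and the 0.25 alert level (a commonly quoted rule of thumb) are all assumptions, and the data is synthetic.

```python
import math


def population_stability_index(expected, actual, n_bins=10):
    """PSI between a baseline sample and a recent sample of one numeric input.

    Bins are equal-width over the baseline range; recent values outside that
    range fall into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # Floor each share so empty bins do not cause log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


ALERT_LEVEL = 0.25  # assumed rule-of-thumb threshold for a significant shift

# Synthetic data: a baseline spread over 0-1, and a recent sample shifted upwards.
baseline = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, recent)
drift_flagged = psi_shifted > ALERT_LEVEL
```

A check like this only covers changes in the input distribution. Concept Drift, where the relationship between inputs and outcome changes, would need a comparison of predictions against actual outcomes instead.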

The frequency of these PRs should depend on the complexity and criticality (material impact) of the model. The higher a model scores on these factors, the more frequent and in-depth the PR should be.

In my next article, I will outline the importance of Model Inventory.

If you wish to discuss any of the points raised or are interested in finding out how we can help you review or implement your own MRM and Model Governance framework, please contact us here to book your free Discovery consultation.

Brendan Jayagopal, Founder and Managing Director, Blue Label Consulting

Although many institutions in the UK are progressing towards a certain standard in Model Risk Management (MRM) practices, the rate of progress is uneven and, unfortunately, so are the ambition levels. It is therefore critical to start with a correct understanding of the essential building blocks of an effective MRM framework, as identified below:

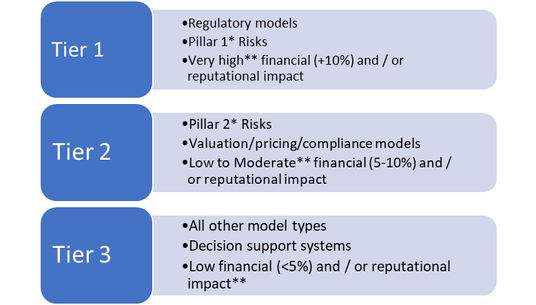

Figure 01 – Building blocks of an effective MRM Framework

Model Tiering and Risk Classification

For any institution to have a successful MRM Framework, it needs a standardised model tiering procedure. The assigned model tier will dictate all model-related processes:

All models should have an assigned tier / risk classification, based on:

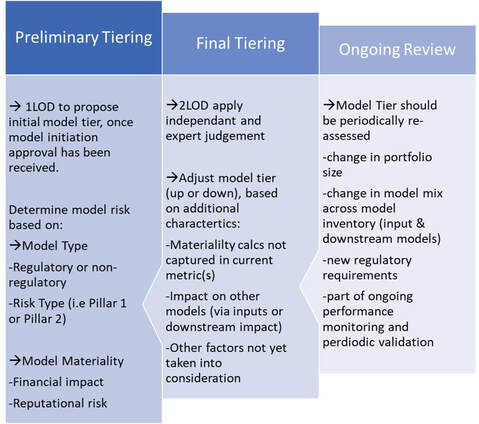

Figure 02 – Model Tiers

Note(s):

*Pillar-1 risks are Credit, Market and Operational Risk. Pillar-2 risks are the residual risks after accounting for Pillar-1 risks; refer to BCBS128 for details on Pillar-1 and Pillar-2 risks.

**Assess the model materiality both quantitatively and qualitatively. Quantitative materiality is calculated as the percentage impact on the institution's relevant financial portfolio. Qualitative materiality is subjective and dependent on both regulatory and customer expectations.

The tiering methodology should be split into a ‘Preliminary’ and a ‘Final’ decision, and the process will ideally include both first line of defence (1LOD) and second line of defence (2LOD) stakeholders:

Figure 03 – Model Tiering Methodology

Once you have a clear understanding of the framework and have deployed models, it is essential to monitor each model properly for as long as it is in use, to gain maximum benefit and stay in control. I will talk about this in my next article.

If you wish to discuss any of the points raised or are interested in finding out how we can help you review or implement your own MRM and Model Governance framework, please contact us here to book your free Discovery consultation.

Sources:
Risk.net MRM report (Risk_MRM_0719.pdf) – slide 9.
Deloitte (MRM_Risk_Ldn_01_MRM_Landscape_Sjoerd_K_Deloitte.pdf) – Model risk management building blocks and industry insights, slide 10.

Brendan Jayagopal, Founder and Managing Director, Blue Label Consulting |
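The quantitative materiality note under Figure 02 lends itself to a small worked example. The sketch below computes materiality as a percentage of the relevant portfolio and maps it to a preliminary tier. The three-tier scheme, the 10% and 1% cut-offs and the function names are hypothetical, since the article's tier definitions are not reproduced here, and the qualitative assessment and the 'Final' decision would still be made by the stakeholders.

```python
def quantitative_materiality(model_impact, portfolio_value):
    """Percentage impact of the model on the relevant financial portfolio."""
    if portfolio_value <= 0:
        raise ValueError("portfolio_value must be positive")
    return 100.0 * model_impact / portfolio_value


def preliminary_tier(materiality_pct, tier1_min=10.0, tier2_min=1.0):
    """Map a materiality percentage to a preliminary tier (1 = highest risk).

    The cut-offs are assumptions for illustration only.
    """
    if materiality_pct >= tier1_min:
        return 1
    if materiality_pct >= tier2_min:
        return 2
    return 3


# Hypothetical figures: a model affecting 5m of a 200m portfolio.
pct = quantitative_materiality(5.0, 200.0)
tier = preliminary_tier(pct)
```

Keeping the cut-offs as parameters makes it easy to revisit them when the tiering methodology is reviewed.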

Brendan Jayagopal

Brendan launched Blue Label Consulting in 2011. With innovative use of Data through emerging data sciences such as AI and other quantitative methods, he delivers robust analytics and actionable insights to solve business problems.

Archives

February 2021
